Troubleshooting

Integration errors

Connection problems

Test connection does not pass successfully

Why it could happen

- Credentials you provided aren't correct.

- No connection to the integrated system.

- API was updated on the integration side.

How to fix

Credentials you provided aren't correct

Check that you have copied or entered the correct password or token required by the integration.

Tokens and passwords must not have spaces at the beginning or at the end. Spaces are treated as characters, so a leading or trailing space makes the password or token invalid for authentication.
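If you script the creation of integrations or keep tokens in configuration files, a check like the one below can catch stray whitespace before a value is used. This is a minimal Java sketch; the class and method names are hypothetical and are not part of Allure TestOps.

```java
/**
 * Hypothetical helper (not part of Allure TestOps): detects leading or
 * trailing whitespace in a copied password or token and removes it.
 */
final class CredentialCheck {
    private CredentialCheck() {}

    // True if the value starts or ends with whitespace (space, tab,
    // line break, ...) as defined by Character.isWhitespace.
    static boolean hasSurroundingWhitespace(String value) {
        return !value.equals(value.strip());
    }

    // Returns the value without leading and trailing whitespace.
    static String sanitize(String value) {
        return value.strip();
    }

    public static void main(String[] args) {
        String pasted = " abc123\n"; // e.g. copied together with a line break
        if (hasSurroundingWhitespace(pasted)) {
            System.out.println("Stray whitespace found, using: [" + sanitize(pasted) + "]");
        }
    }
}
```

Note that String.strip() does not remove non-breaking spaces (U+00A0), which Character.isWhitespace does not treat as whitespace.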

Check that the username is what the integration expects:

- Check that you are using the correct string for the username.

- Carefully read the integration description: it states exactly what must be used as the username. It could be a username or an email address, and in most cases these are not interchangeable.

No connection to the integrated system

This requires involving your DevOps engineers or other engineers responsible for infrastructure.

If there is no connection to the integrated system, the "Can't establish connection" message can appear in the Allure TestOps interface.

To resolve the issue, follow these steps:

- Collect logs from the testops service.

- Check the logs for errors such as java.net.UnknownHostException.

If there are no such messages in the logs, try to enable debug logging for integrations:

- Add a new environment variable LOGGING_LEVEL_IO_QAMETA_ALLURE_TESTOPS_INTEGRATION with the value debug.

- Restart the instance (the service must receive the new parameters).

- Retry the failed operation.

- Collect logs from the testops service.

- Check the logs for the following errors:

  - 400 bad request;

  - HTTP FAILED;

  - java.net.UnknownHostException.

These errors usually have more detailed descriptions above and below the error line.
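Instead of searching the log by hand, you can use a small scanner that prints every line containing one of the errors listed above, together with the surrounding lines where the details usually are. This is a minimal Java sketch; the class name, the five-line context window and the log file argument are assumptions for illustration.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/**
 * Hypothetical log scanner: finds lines that contain one of the error
 * markers from this article and prints them with surrounding context.
 */
final class IntegrationLogScanner {
    static final List<String> MARKERS = List.of(
            "400 bad request", "HTTP FAILED", "java.net.UnknownHostException");

    private IntegrationLogScanner() {}

    // Returns the 0-based indexes of lines containing any marker (case-insensitive).
    static List<Integer> findMatches(List<String> lines) {
        List<Integer> hits = new ArrayList<>();
        for (int i = 0; i < lines.size(); i++) {
            String lower = lines.get(i).toLowerCase(Locale.ROOT);
            for (String marker : MARKERS) {
                if (lower.contains(marker.toLowerCase(Locale.ROOT))) {
                    hits.add(i);
                    break;
                }
            }
        }
        return hits;
    }

    // Prints each match with `context` lines before and after it.
    static void printMatches(List<String> lines, int context) {
        for (int hit : findMatches(lines)) {
            int from = Math.max(0, hit - context);
            int to = Math.min(lines.size(), hit + context + 1);
            System.out.println("---- line " + (hit + 1) + " ----");
            lines.subList(from, to).forEach(System.out::println);
        }
    }

    public static void main(String[] args) throws IOException {
        // Usage: java IntegrationLogScanner testops.log
        printMatches(Files.readAllLines(Path.of(args[0])), 5);
    }
}
```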

The UnknownHostException error indicates that Allure TestOps cannot resolve the host name of the system specified in the integration settings, so it cannot connect to that system.

If UnknownHostException occurs, either check that you provided the correct URL for the integration, or ask your network team to help troubleshoot the issue.
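To tell a wrong URL apart from a name-resolution problem, you can check whether the host from the integration URL resolves at all. This Java sketch is hypothetical (not part of Allure TestOps) and is only meaningful when run on the Allure TestOps host or in the same container network, because DNS results can differ between machines.

```java
import java.net.InetAddress;
import java.net.URI;
import java.net.UnknownHostException;

/**
 * Hypothetical check: does the host name from the integration URL resolve?
 * A failure here reproduces the UnknownHostException seen in the logs.
 */
final class HostResolutionCheck {
    private HostResolutionCheck() {}

    // Extracts the host name from the URL configured in the integration.
    static String hostOf(String url) {
        String host = URI.create(url).getHost();
        if (host == null) {
            // e.g. "jira.example.com" without a scheme has no host part
            throw new IllegalArgumentException("No host in URL: " + url);
        }
        return host;
    }

    // True if the host name can be resolved to at least one IP address.
    static boolean canResolve(String host) {
        try {
            InetAddress.getByName(host);
            return true;
        } catch (UnknownHostException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String host = hostOf(args[0]);
        System.out.println(host + (canResolve(host) ? " resolves" : " does NOT resolve"));
    }
}
```

A successful lookup only rules out DNS problems; firewalls or proxies can still block the connection.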

If you have trouble interpreting the log files, create a support request on our help portal. Use your corporate email address when registering.

API was updated on the integration side

To resolve this issue, you will need to involve your DevOps engineers or other engineers responsible for infrastructure and contact Allure TestOps tech support. Additional development may be required, which could take a significant amount of time.

- Follow all the steps described in No connection to the integrated system. Debug logging for integrations must be enabled.

- If the logs do not show signs of connection problems, create a support request on our help portal. Use your corporate email address when registering.

Test cases

The test case tree is not displayed correctly

How to fix

Try migrating tree data to the new data structure using the API. If the problem persists, create a request on our help portal.

Test results

To keep the text shorter and easier to read, we use the term Allure TestOps agent to refer to either the Allure TestOps integration plugin or the allurectl command-line tool.

Test results are marked as "Orphaned"

Reason

The test results in an Allure TestOps launch are marked as "Orphaned" in the following cases:

- The AS_ID (ALLURE_ID) does not yet exist in the Allure TestOps instance. This attribute is managed by Allure TestOps, so you cannot use arbitrary IDs.

- There is no valid AS_ID (ALLURE_ID), and there is no full path (test case selector) in the test results. The full path (selector) is used to unambiguously match a test result to a test case. Some third-party integrations may not include this parameter. It is optional when working with Allure Report, but crucial for working with Allure TestOps.
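The two conditions above can be restated as a small decision function. This is a simplified Java model written for illustration, not Allure TestOps code; the class and parameter names are hypothetical.

```java
import java.util.Set;

/**
 * Simplified model of the two "Orphaned" conditions described above.
 * It only restates the documented rules; it is not Allure TestOps code.
 */
final class OrphanCheck {
    private OrphanCheck() {}

    /**
     * @param allureId    value of the AS_ID (ALLURE_ID) attribute, or null if absent
     * @param fullPath    full path (test case selector) of the result, or null if absent
     * @param existingIds test case IDs that exist in the Allure TestOps instance
     */
    static boolean isOrphaned(String allureId, String fullPath, Set<String> existingIds) {
        boolean hasId = allureId != null && !allureId.isBlank();
        boolean hasFullPath = fullPath != null && !fullPath.isBlank();
        if (hasId) {
            // Case 1: an ID is set but does not exist in the instance.
            return !existingIds.contains(allureId);
        }
        // Case 2: no ID and no full path, so nothing to match by.
        return !hasFullPath;
    }
}
```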

Possible solution

No full path data

To import results that contain no full path data, valid AS_ID (ALLURE_ID) attributes must be provided in the code and be present in the test results.

Also check that your project uses the most recent versions of its dependencies, then generate the results again and check them.

To check dependencies, please refer to the official project repositories: Allure Report on GitHub or the project documentation.

Incorrect AS_ID (ALLURE_ID) and no full path data

You can only assign an existing Allure ID to a test via the code. If your test framework or programming language is not supported in JetBrains IDEs, create a manual test case, copy its ID, and add this ID to the code. When you upload the test results, this manual test case will be replaced with the data from the automated test.

Missing results — Empty launch — Wrong or empty test results source directory

Symptom: if the launch is started from CI, it is empty. If the launch is started from Allure TestOps, it contains test results in the "In progress" status, which change to "Unknown" after the launch is closed. In both cases, check that you are pointing to the correct test results directory.

Check this in the settings of the Allure TestOps agent used to upload the test results.

You can also run the ls -a command during the build on the path configured for the Allure TestOps agent to see whether there are any test results in this directory. If there are none, check that the paths are correct.
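If you prefer a check that behaves the same on every CI agent, a small Java program can count the *-result.json files in the configured directory and fail the build step when there are none. This is a sketch under assumptions: the class name and the allure-results default path are hypothetical, and only the top level of the directory is checked.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.stream.Stream;

/** Hypothetical counterpart of the `ls -a` check for the results directory. */
final class ResultsDirCheck {
    private ResultsDirCheck() {}

    // Allure test result files end with "-result.json".
    static boolean isResultFile(String fileName) {
        return fileName.endsWith("-result.json");
    }

    // Returns 0 if the directory does not exist; otherwise counts result
    // files directly inside it (subdirectories are not searched).
    static long countResultFiles(Path dir) throws IOException {
        if (!Files.isDirectory(dir)) {
            return 0;
        }
        try (Stream<Path> files = Files.list(dir)) {
            return files.filter(Files::isRegularFile)
                        .filter(p -> isResultFile(p.getFileName().toString()))
                        .count();
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Path.of(args.length > 0 ? args[0] : "allure-results");
        long n = countResultFiles(dir);
        System.out.println(dir.toAbsolutePath() + ": " + n + " result file(s)");
        if (n == 0) {
            System.exit(1); // fail the CI step early so the wrong path is obvious
        }
    }
}
```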

How to fix

The only way to fix this is to point to the right directory with test results.

Find out which path your tests actually save the results to, then update the settings of the Allure TestOps agent.

Missing results — Empty launch — Files exist in CI system, paths are correct but files aren't uploaded

If you see the following details in the logs of the Allure TestOps agent:

Launch [LL], job run [rr], session [ss]:files ignored [0], indexed [XX], processed [0], errors [XX]

or

[Allure] [xxx] Session [xx] total indexed [N], ignored [0], processed [0], errors [N]

where indexed is greater than zero, processed is zero, and errors is greater than zero,

this means that the files were already in the allure-results directory before the Allure TestOps agent started, and no files were added after the agent started. This happens, for example, when you run your tests, collect the test results, copy them to the directory, and only then start the Allure TestOps agent.
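To detect this situation automatically in CI, you can parse the counters from the agent log line and check the three conditions above. This is a hypothetical Java helper; it assumes the counters appear in the indexed [N], processed [N], errors [N] form shown in the examples and may need adjusting for other log formats.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Hypothetical helper: reads counters from an agent log line and flags
 * indexed > 0, processed == 0, errors > 0.
 */
final class AgentLogCounters {
    private static final Pattern COUNTER =
            Pattern.compile("\\b(indexed|ignored|processed|errors) \\[(\\d+)\\]");

    private AgentLogCounters() {}

    // Collects every "name [number]" counter found in the line.
    static Map<String, Long> parse(String line) {
        Map<String, Long> counters = new HashMap<>();
        Matcher m = COUNTER.matcher(line);
        while (m.find()) {
            counters.put(m.group(1), Long.parseLong(m.group(2)));
        }
        return counters;
    }

    // True when the line matches the "existing files were not indexed" pattern.
    static boolean needsIndexExistingFiles(String line) {
        Map<String, Long> c = parse(line);
        return c.getOrDefault("indexed", 0L) > 0
                && c.getOrDefault("processed", 0L) == 0
                && c.getOrDefault("errors", 0L) > 0;
    }
}
```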

How to fix

If your test results are copied from elsewhere before the Allure TestOps agent starts and no new files appear afterwards, you need to index and upload the existing files.

For a plugin (for example, for Jenkins or TeamCity), add the indexExistingFiles: true parameter to index existing files.

```groovy
withAllureUpload(indexExistingFiles: true, name: '${SOME_JOB_NAME}', projectId: '', serverId: '', tags: '') {
    // ...
}
```

When using allurectl, upload the existing files with the allurectl upload command.

Missing results — Launch is not empty — Some results are missing — Muted tests

If you or someone on your team marks a test as muted, the results of this test are not shown in launches.

Muted tests consequences

A muted test does not appear on the main page of a launch.

You can view the test results of a muted test if you open a launch containing this test and go to the Tree tab.

In the test result card, at the bottom right, you will see the Unmute button.

A muted test is not included in analytics and statistics calculations.

A muted test is marked as resolved even if no defect is linked to it.

How to check — Test cases — Filter

- Go to the Test cases section.

- Click the search box above the test case list.

- Select Muted as the search attribute and Yes as its value.

In the test case list, you will see all muted test cases. The Mutes section of each test case will display:

- information about the person who marked the test case as muted;

- the date when it was done.

How to check — Test results — Test result card

In an open launch, you will see the active Unmute button in the bottom right corner of the test result card.

How to fix

In most cases, if a test is muted, you don’t need to fix anything, as it was intentionally done by someone from your team. Mute is one of the ways you resolve failures in your tests and is a normal part of working with Allure TestOps.

When the problem with the failing test is fixed, the mute can be removed. If you are unsure why the test was muted, ask the person who muted it.

Missing results — Size of a result file

There is a 2 000 000 bytes limit for a test result file (*-result.json).

If a result file exceeds 2 000 000 bytes, Allure TestOps does not process it as a test result and treats it as an attachment instead. This is intentional: it avoids failures caused by insufficient resources when processing large amounts of data.

How to check

Check your allure-results folder to see if there are any *-result.json files larger than 2 000 000 bytes.
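On a CI agent without convenient shell tools, the same check can be done with a short Java program. This is a minimal sketch; the class name and the default allure-results path are assumptions.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical check for *-result.json files above the size limit. */
final class ResultSizeCheck {
    // Limit stated in this article for *-result.json files, in bytes.
    static final long LIMIT_BYTES = 2_000_000L;

    private ResultSizeCheck() {}

    static boolean isOversized(long sizeBytes) {
        return sizeBytes > LIMIT_BYTES;
    }

    // Lists *-result.json files directly in `dir` that exceed the limit.
    static List<Path> findOversized(Path dir) throws IOException {
        try (Stream<Path> files = Files.list(dir)) {
            return files.filter(Files::isRegularFile)
                        .filter(p -> p.getFileName().toString().endsWith("-result.json"))
                        .filter(p -> {
                            try {
                                return isOversized(Files.size(p));
                            } catch (IOException e) {
                                throw new UncheckedIOException(e);
                            }
                        })
                        .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Path.of(args.length > 0 ? args[0] : "allure-results");
        for (Path p : findOversized(dir)) {
            System.out.println(p + " " + Files.size(p) + " bytes");
        }
    }
}
```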

How to fix

Consider moving some of the information you add directly to test results into attachments. The Allure framework, which Allure TestOps uses under the hood, allows adding textual information as attachments, for example as strings or CSV tables. This reduces the load on the system and, in most cases, presents the information better than adding it to the test case scenario.

Missing results — Errors during the upload

Tests could have missing results due to the settings of the network equipment (routers, firewalls) and network software (for example, reverse proxies such as nginx).

How to check

You will need the help of your network administrator, DevOps engineer, or any other person responsible for network, reverse proxy, and firewall configuration.

You need to check the following:

- reverse proxy server settings (if applicable) as described here:

  - timeouts;

  - data transfer limits;

- network timeouts on routers/firewalls, similar to the reverse proxy server timeouts;

- network settings on routers/firewalls, similar to the reverse proxy server limits on data transfer size;

- blocking rules (blacklists, whitelists, etc.).

How to fix

Try adjusting the timeout settings and data transfer limits to the recommended values on your network software or hardware. Configure the parameters to allow file transfers to Allure TestOps based on the workload you generate.

Missing test results — Retries

If multiple *-result.json files are generated for different tests, but only one test result is displayed in the launch after upload, this may be due to the following reasons:

- These tests belong to different projects in your code but have identical method signatures for some reason.

- The test is parameterized, but the parameters are not specified in the test results.
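The effect can be illustrated with a simplified model, assuming that results are matched by full path plus reported parameter values: results that produce the same key collapse into one test result, and the extra ones appear as retries. This Java sketch is for illustration only; the names are hypothetical and it does not reproduce the exact Allure TestOps matching logic.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Simplified, hypothetical model of how results could collide. */
final class ResultKeyModel {
    private ResultKeyModel() {}

    // Builds a matching key from the full path and the reported parameters.
    // TreeMap sorts parameters by name, so their order does not matter.
    static String keyOf(String fullPath, Map<String, String> parameters) {
        return fullPath + " " + new TreeMap<>(parameters);
    }

    // Counts uploaded results per key. In this model, a key seen more than
    // once is shown as a single test result with retries.
    static Map<String, Integer> countByKey(List<String> keys) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String key : keys) {
            counts.merge(key, 1, Integer::sum);
        }
        return counts;
    }
}
```

Two runs of a parameterized test that report no parameters produce the same key; reporting the parameter that differs (for example, title) makes the keys distinct.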

How to check

- Go to Launches.

- Find and open your launch.

- Go to the Tree tab.

- Open your test result.

- In the test result card, go to the Retries tab.

- If this tab contains retry attempts but you ran the test only once, you need to update your code.

How to fix

Non-parameterized test

If the test result has retries and the test is not parameterized, make sure that the full paths of your tests differ. There are several ways to do this:

- Explicitly assign an Allure ID to each of the tests in your code.

- Make sure that the test methods are named differently. If necessary, rename them.

For reliability, it is best to use both of these methods.

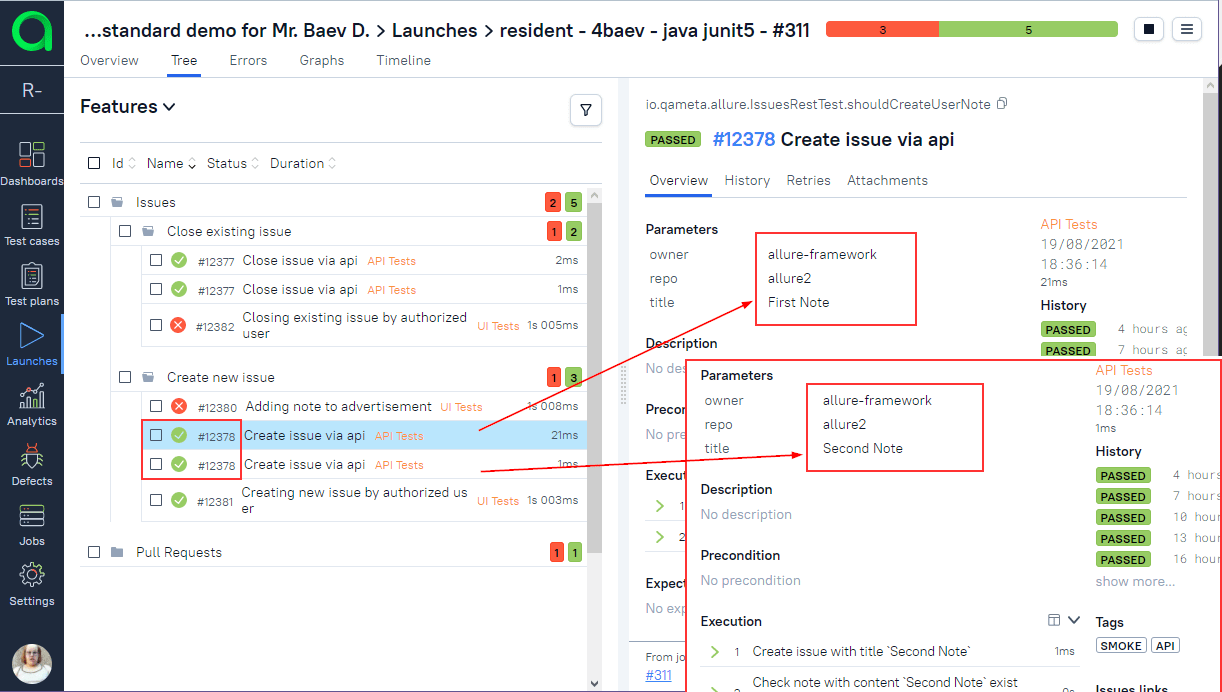

Parameterized test

If the test result has retries and the test is parameterized, you need to explicitly provide the parameters to the test results, as some test frameworks do not do this by default.

Here is an example for Java:

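```java
import static io.qameta.allure.Allure.parameter;

import io.qameta.allure.Story;
import org.junit.jupiter.params.ParameterizedTest;
import org.junit.jupiter.params.provider.ValueSource;

// ...

private static final String OWNER = "test-framework";
private static final String REPO = "test-repository";

// ... (`steps` is declared in the omitted part of the class)

@Story("Create new issue")
@ParameterizedTest(name = "Create issue via api")
@ValueSource(strings = {"First Note", "Second Note"})
public void shouldCreateUserNote(String title) {
    // Report every value that distinguishes one run from another.
    parameter("owner", OWNER);
    parameter("repo", REPO);
    parameter("title", title);

    steps.createIssueWithTitle(OWNER, REPO, title);
    steps.shouldSeeIssueWithTitle(OWNER, REPO, title);
}
```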

For the example above, the owner, repo, and title parameters are written to the parameters section of the test result file (shown here for the run with the title First Note):

```json
"parameters": [
  {
    "name": "owner",
    "value": "test-framework"
  },
  {
    "name": "repo",
    "value": "test-repository"
  },
  {
    "name": "title",
    "value": "First Note"
  }
],
```

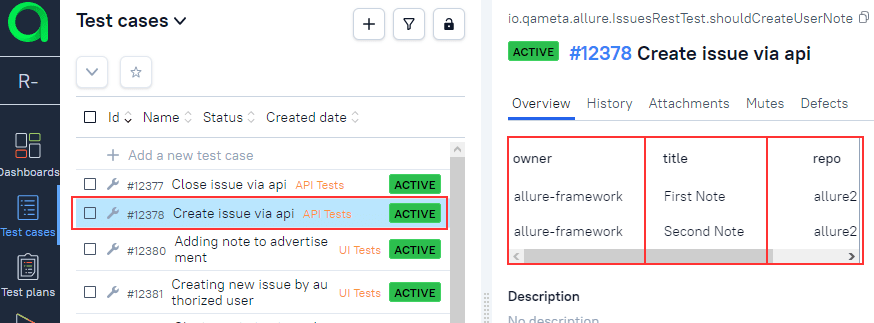

These parameters will be processed by Allure TestOps and for the same test case you will see several test results:

Then the following test case will be created:

Cropped values in the test result fields

When working with automated tests in Allure TestOps, note that any field of an automated test can contain a maximum of 255 characters. Any characters beyond that are cut off when the test result is loaded into Allure TestOps.
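If you generate long names or values, you can check before uploading which ones would be shortened. This is a hypothetical Java helper; it assumes the limit is counted in Unicode code points, which may differ from how Allure TestOps actually counts characters.

```java
/** Hypothetical pre-upload check for the 255-character field limit. */
final class FieldLengthCheck {
    // Limit stated in this article for fields of automated tests.
    static final int MAX_LENGTH = 255;

    private FieldLengthCheck() {}

    // True if the value is longer than the limit (counted in code points).
    static boolean willBeCut(String value) {
        return value.codePointCount(0, value.length()) > MAX_LENGTH;
    }

    // Returns what would remain after cutting, without splitting a
    // surrogate pair in the middle.
    static String asStored(String value) {
        if (!willBeCut(value)) {
            return value;
        }
        return value.substring(0, value.offsetByCodePoints(0, MAX_LENGTH));
    }
}
```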

Automated tests are stuck on "In progress" status when run or rerun

This can happen if the received test result parameters or environment variables differ from the expected values.

For example, if you define two environment variables when running a test (OS=iOS and VER=123), but then the value of one of them changes during the run (for example, it is determined dynamically and therefore always has a different value), Allure TestOps will create a new test result upon receiving the result file and will still wait for a result with the original values (which will never arrive).

The same will happen if Allure TestOps receives a test result with a different parameter value.

If you use test parameters or environment variables to store dynamic values, we recommend using attachments for this purpose instead. Alternatively, some integration modules allow you to mark parameters as excluded, so that a change in their values does not create a new test result instead of the one you expect to receive.
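The following Java sketch models why excluding a dynamic parameter helps. It assumes, for illustration only, that a result is matched by its full path plus all non-excluded parameters; the names are hypothetical and are not the API of any Allure integration module.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/** Hypothetical model: excluded parameters do not affect result matching. */
final class ExcludedParameterModel {
    private ExcludedParameterModel() {}

    // Key built from the full path and the parameters that are not excluded.
    static String keyOf(String fullPath, Map<String, String> parameters, Set<String> excluded) {
        Map<String, String> kept = new TreeMap<>(parameters);
        kept.keySet().removeAll(excluded);
        return fullPath + " " + kept;
    }
}
```

With OS=iOS fixed and VER changing between the start of the run and the upload, the keys differ unless VER is excluded, which corresponds to the "In progress" result that never receives its expected counterpart.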

Test results attachments

Attachments are missing in Allure TestOps but are available in Allure Report

I can see attachments in Allure Report, but I cannot see them in Allure TestOps

How to check: attachments in folders

Go to the source of your test result (CI pipeline, local folder, IDE project, etc.).

For this example, we're assuming the results are stored in the allure-results folder.

Check that all attachments are located in the root of the allure-results folder. If allure-results contains subfolders with attachments, it is expected that these attachments are missing in Allure TestOps.
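For large result sets, a small program can list every file that is not directly in the root of allure-results. This Java sketch is hypothetical; the class name and default path are assumptions.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical check for files stored in subfolders of allure-results. */
final class AttachmentLayoutCheck {
    private AttachmentLayoutCheck() {}

    // True if `file` is not located directly inside `resultsDir`.
    static boolean isInSubfolder(Path resultsDir, Path file) {
        Path root = resultsDir.toAbsolutePath().normalize();
        Path parent = file.toAbsolutePath().normalize().getParent();
        return !root.equals(parent);
    }

    // Walks the whole tree and returns regular files below the root level.
    static List<Path> filesInSubfolders(Path resultsDir) throws IOException {
        try (Stream<Path> all = Files.walk(resultsDir)) {
            return all.filter(Files::isRegularFile)
                      .filter(p -> isInSubfolder(resultsDir, p))
                      .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        Path dir = Path.of(args.length > 0 ? args[0] : "allure-results");
        for (Path p : filesInSubfolders(dir)) {
            System.out.println("In a subfolder: " + dir.relativize(p));
        }
    }
}
```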

How to fix: attachments in folders

Configure your project so that all files are stored directly in the allure-results folder, without subfolders.

Allure Report renders the attachments because it works with files on a file system. Allure TestOps, however, has no information about folders, so the path to an attachment file is treated as part of the file name. Consequently, the attachment is not linked to the test results and is deleted by Allure TestOps as not linked to any test result.

How to check: allurectl configuration

Check the allurectl settings on the CI system side. If the skip attachments option is used, allurectl skips uploading the attachments of passed tests.

How to fix: allurectl configuration

Use the test results from the CI pipeline, or temporarily enable uploading attachments for passed tests.

How to check: cleanup rules

Allure TestOps has an internal routine that deletes old artifacts to free up storage. This could be why you cannot see the attachments of earlier launches and test results.

How to fix: cleanup rules

If attachments were deleted from the Allure TestOps storage, you can use attachments from newer launches or gather them from the CI artifacts.

Services do not start

Allure TestOps service does not start (database lock)

If you find one of the following messages in the log:

```text
org.springframework.beans.factory.BeanCreationException: Error creating bean with name 'liquibase' defined in class path resource [org/springframework/boot/autoconfigure/liquibase/LiquibaseAutoConfiguration$LiquibaseConfiguration.class]: Invocation of init method failed; nested exception is liquibase.exception.LockException: Could not acquire change log lock.
```

or

```text
liquibase.lockservice: Waiting for changelog lock...
liquibase.lockservice: Waiting for changelog lock...
liquibase.lockservice: Waiting for changelog lock...
liquibase.lockservice: Waiting for changelog lock...
```

it means that the database is locked and the service cannot access it. This can happen if the service was shut down abruptly due to an infrastructure or network failure.

To fix this:

Stop the Allure TestOps service. Docker Compose example:

```shell
docker compose stop testops
```

Sign in to the testops database using psql (psql -h <host> -U <user> <database name>):

```shell
psql -h 127.0.0.1 -U testops testops
```

Run the following query to display all database locks:

```sql
SELECT * FROM databasechangeloglock;
```

Find and remove the lock that is preventing the services from starting:

```sql
UPDATE databasechangeloglock SET locked=FALSE, lockgranted=NULL, lockedby=NULL WHERE id=1;
```

Start the previously stopped Allure TestOps services. Docker Compose example:

```shell
docker compose start testops
```

Allure TestOps service crashes or uses too much CPU when making a large number of API calls

If you use an API token that you have created in the user menu to access the API, you need to switch to OAuth authentication (using JWT as Bearer token). See API Authentication for more information.
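The sketch below shows the general pattern: exchange the API token for a JWT once, then send the JWT as a Bearer token with each API call. The endpoint path (/api/uaa/oauth/token) and the form fields (grant_type=apitoken, scope=openid, token) are assumptions, not confirmed by this article; check them against the API Authentication article for your version. The requests are only built here, not sent.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

/** Hypothetical helper for JWT-based access to the Allure TestOps API. */
final class TestOpsAuth {
    // ASSUMPTION: token exchange endpoint; verify it in API Authentication.
    static final String TOKEN_PATH = "/api/uaa/oauth/token";

    private TestOpsAuth() {}

    // Encodes fields as application/x-www-form-urlencoded.
    static String formBody(Map<String, String> fields) {
        return fields.entrySet().stream()
                .map(e -> URLEncoder.encode(e.getKey(), StandardCharsets.UTF_8) + "="
                        + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8))
                .collect(Collectors.joining("&"));
    }

    // Request that exchanges a user API token for a JWT (send it once).
    static HttpRequest tokenRequest(String baseUrl, String apiToken) {
        Map<String, String> fields = new LinkedHashMap<>();
        fields.put("grant_type", "apitoken"); // ASSUMPTION
        fields.put("scope", "openid");        // ASSUMPTION
        fields.put("token", apiToken);
        return HttpRequest.newBuilder(URI.create(baseUrl + TOKEN_PATH))
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(formBody(fields)))
                .build();
    }

    // Subsequent API calls reuse the same JWT as a Bearer token.
    static HttpRequest apiRequest(String baseUrl, String path, String jwt) {
        return HttpRequest.newBuilder(URI.create(baseUrl + path))
                .header("Authorization", "Bearer " + jwt)
                .GET()
                .build();
    }
}
```

Send the requests with java.net.http.HttpClient. Reading the access token from the JSON response is left out because the Java standard library has no JSON parser.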

Errors in Allure TestOps interface with code 409

"Request failed with status code 409" error when trying to move a test case to another project

This can happen when an automated test case with the same attributes already exists in the target project.

Example:

- You upload two test results to Project A. Allure TestOps creates two new test cases with IDs 1 and 2.

- You move one of the created test cases (ID = 2) to Project B.

- You upload results for the same two test cases to Project A. One test case already exists in Project A (ID = 1), but the second one was moved, so a new test case is created (ID = 3).

Now, if you try to move the test case with ID = 2 from Project B back to Project A, an error will occur because an identical test case (except for the ID) already exists in Project A.

Getting binary files for the deployment

Cannot download packages from qameta.jfrog.io

We have moved all our packages to dl.qameta.io.

You can find the updated installation instructions here. Your old access credentials should work, but if they don't, contact our support.

Cannot sign in to Docker Desktop using Qameta credentials

This is the correct behavior. The credentials you received from the Qameta sales team should only be used with the Docker command-line tool to download the Allure TestOps images as described in this article.

User sign-in issues

Cannot sign in to Allure TestOps as administrator

Make sure that you are trying to sign in using the built-in administrator account and not a third-party authentication account:

If you use an IdP to authenticate users (LDAP, SAML 2.0, OpenID) as the main authentication option, sign in with the local account at the https://<URL>/login/system address.

If you use the server version of Allure TestOps, you can find the password in the configuration files of your instance.

If you use the cloud version of Allure TestOps, you can find the password in the message you received upon creating your instance.

Error 401: CSRF Token Missing

If you see the error "Request failed with status code 401. The expected CSRF token could not be found" in the browser's developer tools (DevTools) when a user cannot sign in to Allure TestOps, the issue is most likely caused by a "stuck session" in Redis.

Redis is used for storing user sessions. A "stuck session" can occur due to differences in time zones. For example, Allure TestOps operates in one time zone, the user's computer is in another, and the user is also connected via a VPN endpoint in a third time zone. This combination can result in a session that is either expired before it is created or not yet active from the perspective of the Allure TestOps instance.

To resolve the issue:

Run the following command:

```shell
redis-cli FLUSHALL
```

This command clears all session data stored in Redis. As a result, user sessions are reset and all users are signed out.

Alternatively, you can restart the Redis service. This has the same effect as the FLUSHALL command. Allure TestOps does not store any persistent data in Redis, so restarting it only removes user session data.

Both actions reset user sessions. Consider informing users in advance or performing these actions after business hours.

allurectl

For allurectl troubleshooting, refer to the dedicated allurectl page.