Working with test results

When you close a launch (see the sections on running a manual test case, running an automated test case, and creating a combined launch), all the test results from the launch are reflected in other sections of Allure TestOps. This includes the Test cases and Dashboards sections.

Resolving unsuccessful test results in a launch

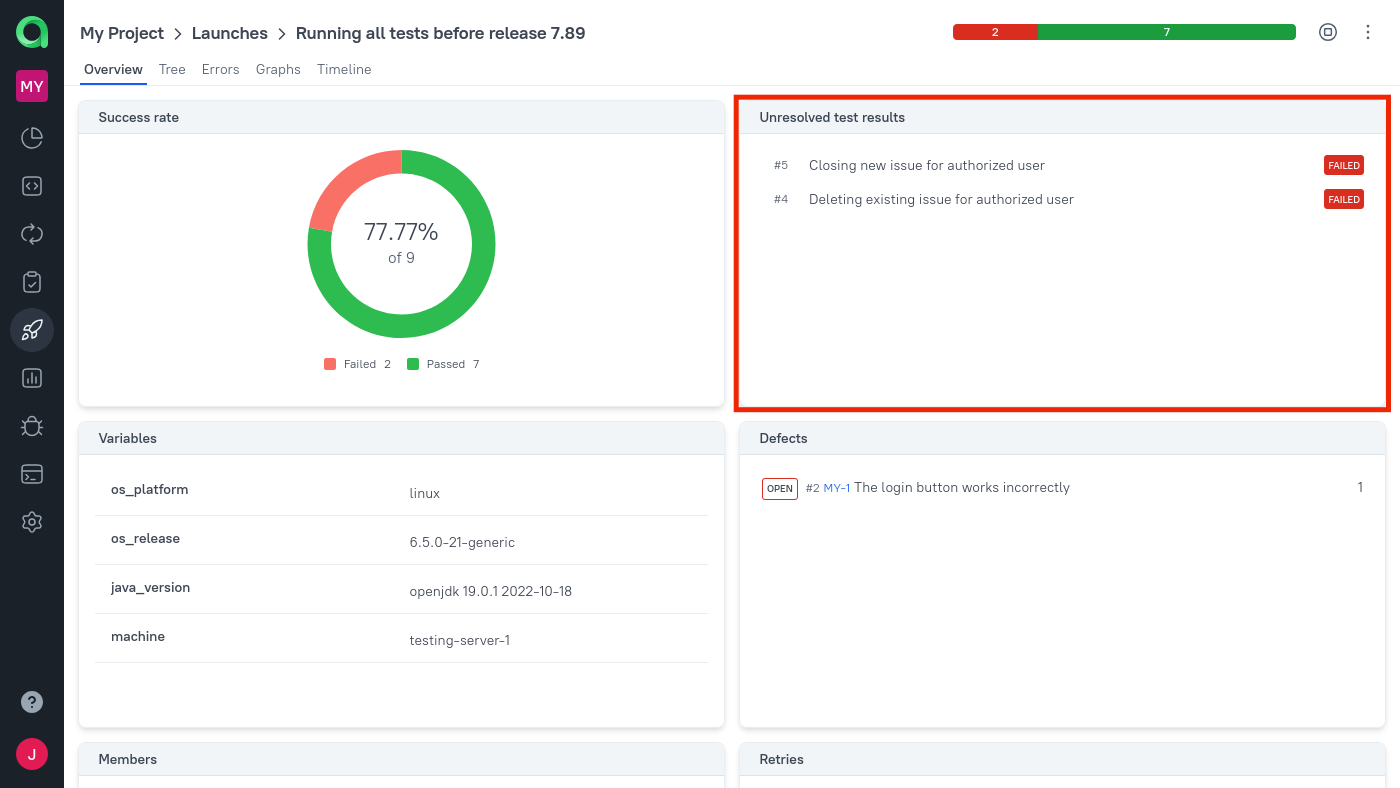

After you create a test launch and add test results to it (either by specifying them manually or by uploading data), but before you close the launch, we recommend resolving all unsuccessful test results. Resolving a test result means making sure that you understand why it did not pass in this launch or, at least, that it is already being investigated. To do so, use the Unresolved test results widget.

If you think of the launch overview as documentation about your project's quality, then the Unresolved test results widget is a checklist for completing that documentation.

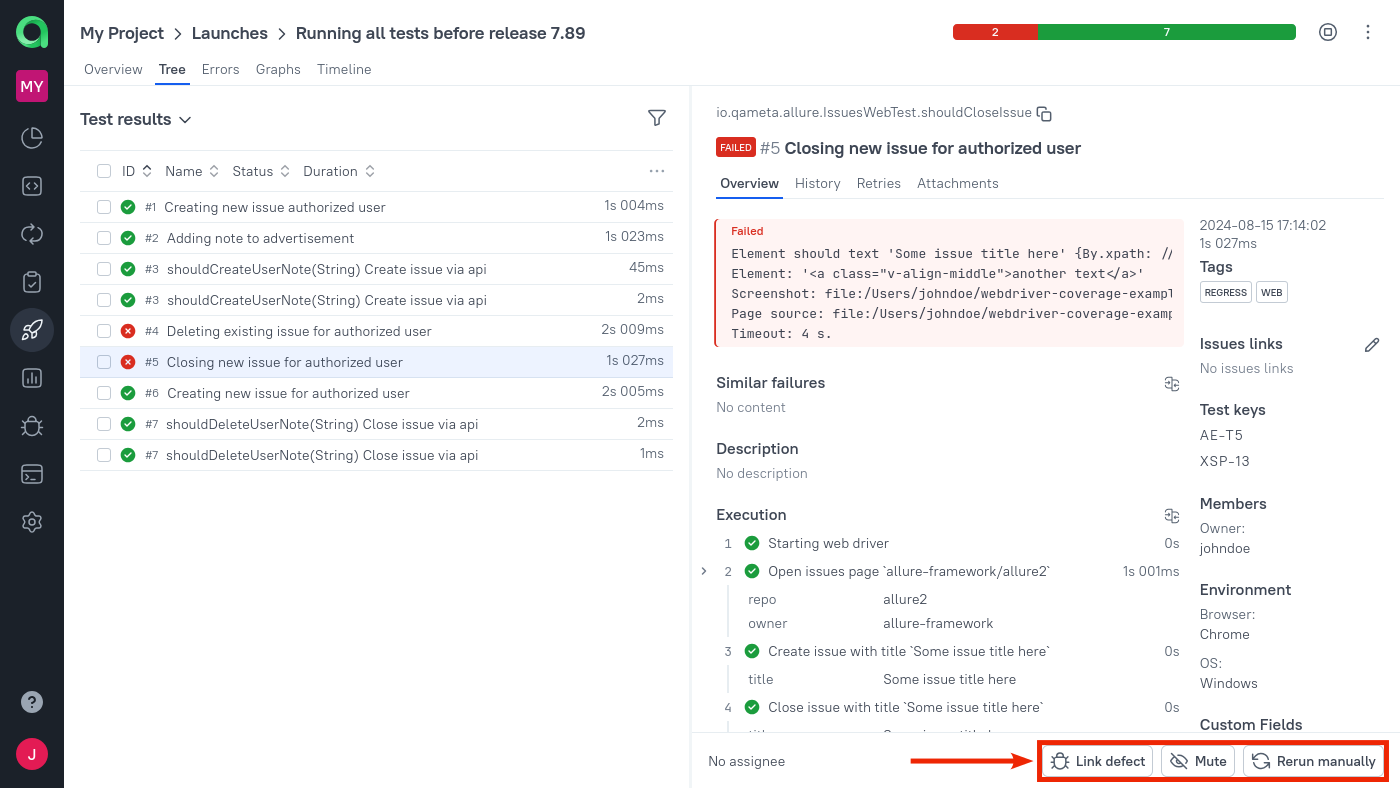

The three possible ways to resolve a test result are represented by the three buttons under its details.

Link the test result to a defect. A defect represents a known problem in either the product or the tests. This is similar to creating an issue in your project's issue tracker — in fact, you can enable an integration with an issue tracker to sync a defect's status with an external issue's status.

Mute the test. If the unsuccessful test result is not important enough to deal with right now, you can stop seeing it by muting the test case. Note that the mute applies to the test case, not just to the current result. A muted test is excluded from the launch's statistics: for example, if 9 of 10 tests passed and you mute the unsuccessful one, the launch will show 9/9 tests passed.

Rerun the test manually. If a later attempt passes, Allure TestOps will mark the issue as resolved and display the rerun information in the Retries widget. Attempts can be added not only by rerunning tests manually but also by uploading new automated test results. You can use either method for the rerun, regardless of which one you used previously.
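The three resolution paths and the effect of muting on statistics can be illustrated with a small model. This is only a sketch for reasoning about the rules described above, not how Allure TestOps is implemented: the `Result` class, its fields, and the helper functions are hypothetical names introduced for this example, and the model assumes that the latest attempt determines a result's status.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Result:
    """Hypothetical, simplified model of one test result in a launch."""
    name: str
    attempts: List[str]           # statuses in chronological order
    defect: Optional[str] = None  # hypothetical identifier of a linked defect
    muted: bool = False

    @property
    def status(self) -> str:
        # Assumption: the latest attempt determines the current status.
        return self.attempts[-1]


def is_resolved(result: Result) -> bool:
    """A result needs no attention if it passed (possibly after a rerun),
    is linked to a defect, or belongs to a muted test."""
    return (
        result.status == "passed"
        or result.defect is not None
        or result.muted
    )


def unresolved(results: List[Result]) -> List[str]:
    """Names of results that would still appear on the checklist."""
    return [r.name for r in results if not is_resolved(r)]


def passed_ratio(results: List[Result]) -> Tuple[int, int]:
    """(passed, total) with muted tests excluded from both numbers."""
    counted = [r for r in results if not r.muted]
    passed = sum(1 for r in counted if r.status == "passed")
    return passed, len(counted)


if __name__ == "__main__":
    launch = [Result(f"test-{i}", ["passed"]) for i in range(9)]
    launch.append(Result("test-9", ["failed"]))

    print(passed_ratio(launch))  # (9, 10)
    print(unresolved(launch))    # ['test-9']

    launch[-1].muted = True      # mute the unsuccessful test
    print(passed_ratio(launch))  # (9, 9)
    print(unresolved(launch))    # []
```

In this model, appending `"passed"` to a failed result's `attempts` list has the same effect on the checklist as linking a defect or muting, but only muting changes the total used for the pass ratio.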

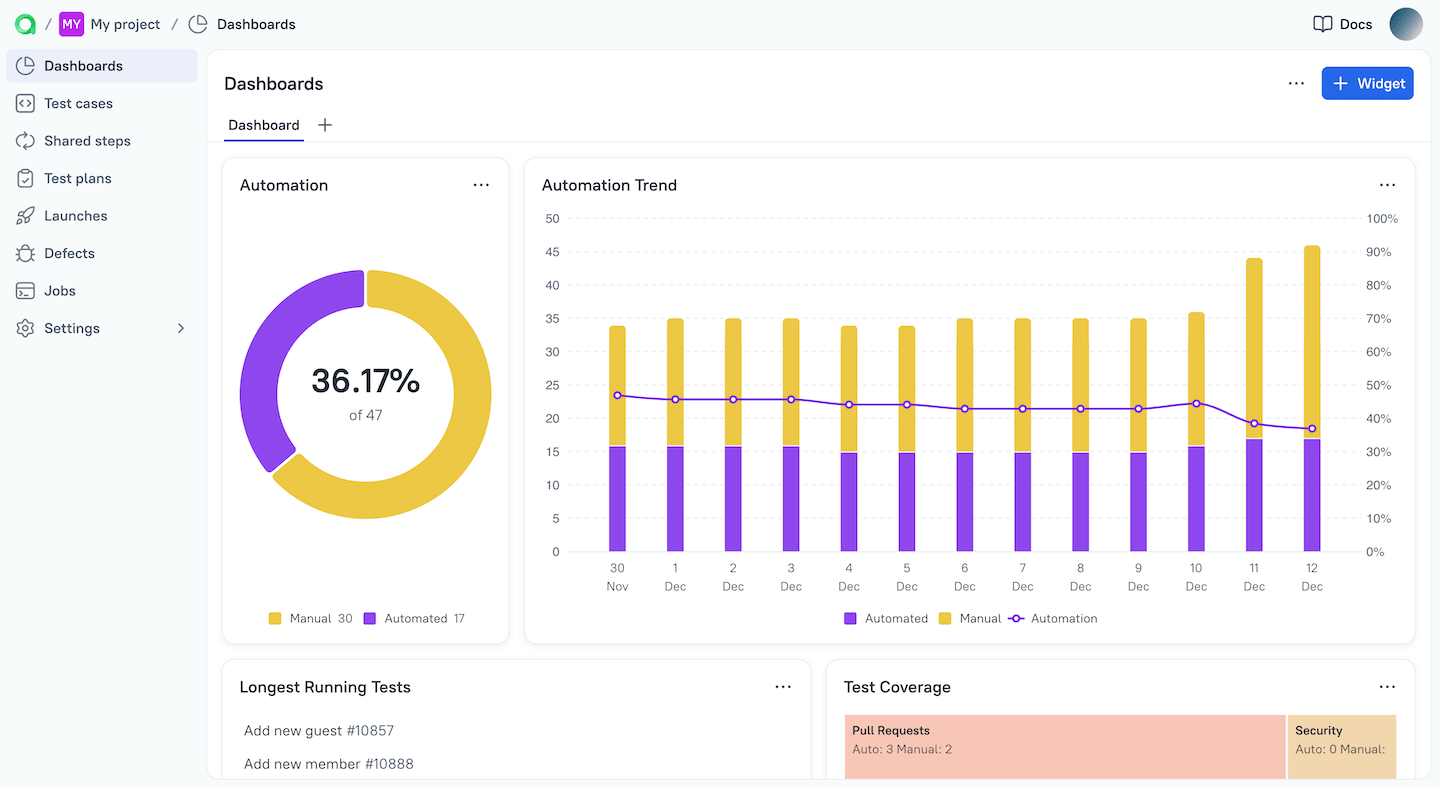

Analyzing trends on dashboards

Dashboards are collections of widgets that help you visualize the current state of your project and identify various trends. Most of the widgets additionally allow you to use Allure Query Language to filter the data. You can create multiple dashboards to cover different aspects of your project.

To learn more about working with dashboards, see Dashboards.